In part two of this series of blog posts, we talked about testing our TEAL smart contract by running automated tests against an actual Algorand Sandbox.

In part three, the last part of this series, we’ll look at how we can take the sandbox out of the equation and use a simulated Algorand virtual machine (AVM) to test our code, and we’ll discuss the advantages that brings.

We’ll also discuss defensive testing and learn how to attack our own code before the real hackers even get a crack at it.

To get the code and set up the example project for this blog post, please see part one.

Why simulate the AVM?

In this post we will run our automated tests against a simulated Algorand virtual machine (AVM).

But first, what is the point of simulation?

Firstly, it’s much easier. When we run our tests on a simulated AVM we don’t need a sandbox, so we can do some testing without the hassle of figuring out how to run a sandbox, and without even having to wait for one to boot up.

Simulated testing is also much faster. A test pipeline running against a normal mode sandbox might take minutes or tens of minutes to complete (depending on the size of our contract and the number of tests). Against a dev mode sandbox it might complete in seconds or tens of seconds (again, depending).

Does that seem fast to you? It might, but running against a simulated AVM is much faster than that. Your test pipeline can complete in milliseconds or tens of milliseconds, orders of magnitude faster than running against even a dev mode sandbox.

What’s the point? With a test pipeline that completes in milliseconds we can get much faster feedback. That’s a crucial part of the puzzle when trying to create a faster pace of development. And if you are hoping to do test driven development (TDD) you really do need a testing pipeline this fast, so that it can run while you are coding, delivering the near instant feedback that TDD requires. Yes, TDD really is possible with TEAL code and we’ll talk more about that towards the end of this post.

Run the simulated tests

Ok, let’s run our simulated tests:

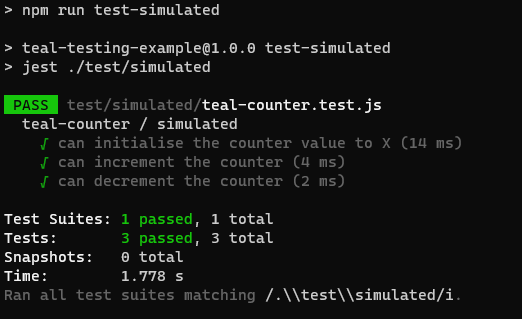

npm run test-simulatedHopefully you’ll see a small list of passing tests for the teal-counter:

An example test

To understand how these simulated tests work, let’s consider one test. Here is the simulated version of the test that we examined in part two:

```javascript
test("can increment the counter", async () => {
    const config = {
        "appGlobals": {
            "0": {
                "counterValue": 15 // Previous value.
            }
        },
        "txn": {
            "ApplicationID": 1,
            "OnCompletion": 0,
            "ApplicationArgs": {
                "0": "increment"
            }
        }
    };

    const result = await executeTeal(APPROVAL_PROGRAM, config);

    expect(success(result)).toBe(true);

    expectFields(result.appGlobals, {
        "0": {
            counterValue: {
                type: "bigint",
                value: 16,
            },
        },
    });
});
```

Let’s break this down. Firstly, we have a configuration for our simulated AVM:

```javascript
const config = {
    "appGlobals": {
        "0": {
            "counterValue": 15
        }
    },
    "txn": {
        "ApplicationID": 1,
        "OnCompletion": 0,
        "ApplicationArgs": {
            "0": "increment"
        }
    }
};
```

This configuration sets the state of the AVM before executing our smart contract. Note how it sets the initial value of the counter in the global state and how it requests the increment method in the application arguments.
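Because the configuration is a plain JavaScript object, tests can share a small builder for it instead of repeating the whole literal in every test. A minimal sketch, using a hypothetical helper called `makeCounterConfig` (it is not part of the example project), that produces the same shape of configuration for any starting value and method name:

```javascript
// Hypothetical helper: builds a simulated-AVM configuration in the same shape
// as the config object above, for a given starting counter value and method.
function makeCounterConfig(initialValue, method) {
    return {
        appGlobals: {
            "0": {
                counterValue: initialValue, // Value of the counter before the call.
            },
        },
        txn: {
            ApplicationID: 1,
            OnCompletion: 0, // 0 = NoOp application call.
            ApplicationArgs: {
                "0": method, // Name of the method to invoke.
            },
        },
    };
}

// Usage: the same configuration as the "can increment the counter" test.
const incrementConfig = makeCounterConfig(15, "increment");
console.log(JSON.stringify(incrementConfig));
```

A decrement test could then start from `makeCounterConfig(15, "decrement")`, which keeps each test focused on what differs.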

Next we call a function that executes our TEAL code and waits for the result to come back:

```javascript
const result = await executeTeal(APPROVAL_PROGRAM, config);
```

The result that we get back is the state of the AVM after executing our contract. The first thing we’d like to do with this result is verify that the contract executed successfully and was approved:

```javascript
expect(success(result)).toBe(true);
```

We do this using the helper function success. This checks that there is a single positive integer on the AVM stack after contract execution, indicating that the contract executed successfully. If it doesn’t find this, the expectation throws an exception which fails our test.
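The success helper lives in the example project and isn’t shown here, so the following is only a sketch of how such a check could be written. It assumes, hypothetically, that the result exposes the final stack as `result.stack` and that each stack entry is a typed value like `{ type: "bigint", value: 1n }`, mirroring the `{ type, value }` shape this test uses for global state. Check the teal-interpreter’s actual result format before relying on it.

```javascript
// Sketch of a success() check. ASSUMPTION: `result.stack` holds the final AVM
// stack as an array of typed values such as { type: "bigint", value: 1n }.
function success(result) {
    const stack = result.stack;

    // An approved contract leaves exactly one value on the stack...
    if (!Array.isArray(stack) || stack.length !== 1) {
        return false;
    }

    // ...and that value must be a positive integer.
    const { type, value } = stack[0];
    return type === "bigint" && BigInt(value) > 0n;
}

console.log(success({ stack: [{ type: "bigint", value: 1n }] })); // true
console.log(success({ stack: [{ type: "bigint", value: 0n }] })); // false
console.log(success({ stack: [] }));                              // false
```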

We can check the result of our test using the same kind of declarative and expressive helper functions we encountered in part two of this series. In particular, we use the function expectFields to check that our global state contains the counter that we just incremented:

```javascript
expectFields(result.appGlobals, {
    "0": {
        counterValue: {
            type: "bigint",
            value: 16,
        },
    },
});
```

If expectFields detects that the counter has some other value, not the one we expected, then it throws an exception and fails our test.
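expectFields also comes from the example project. As a rough sketch of the idea only (not Hone’s code), a recursive partial match could look like this. Treating a BigInt and a number with the same value as equal is an assumption of this sketch, made because the expectation above uses the number 16 for a field whose type is "bigint".

```javascript
// Sketch of an expectFields-style helper. Every field listed in `expected`
// must exist in `actual` with an equal value; fields that `expected` does not
// mention are ignored. Throws an Error describing the first mismatch.
function valuesEqual(actual, expected) {
    const isNumeric = (v) => typeof v === "number" || typeof v === "bigint";
    if (isNumeric(actual) && isNumeric(expected)) {
        // Loose equality compares a BigInt and a number by value (16n == 16).
        return actual == expected;
    }
    return actual === expected;
}

function expectFields(actual, expected, path = "") {
    for (const [key, expectedValue] of Object.entries(expected)) {
        const fieldPath = path ? `${path}.${key}` : key;
        const actualValue = actual == null ? undefined : actual[key];

        if (expectedValue !== null && typeof expectedValue === "object") {
            // Nested object: recurse into it.
            if (actualValue === null || typeof actualValue !== "object") {
                throw new Error(`Expected an object at "${fieldPath}", got ${String(actualValue)}`);
            }
            expectFields(actualValue, expectedValue, fieldPath);
        } else if (!valuesEqual(actualValue, expectedValue)) {
            throw new Error(
                `Expected "${fieldPath}" to be ${String(expectedValue)}, got ${String(actualValue)}`
            );
        }
    }
}

const simulatedGlobals = { "0": { counterValue: { type: "bigint", value: 16n } } };

// Passes: 16n and 16 hold the same integer.
expectFields(simulatedGlobals, { "0": { counterValue: { type: "bigint", value: 16 } } });

// Fails with a message that points at the mismatched field.
try {
    expectFields(simulatedGlobals, { "0": { counterValue: { type: "bigint", value: 17 } } });
} catch (err) {
    console.log(err.message); // Expected "0.counterValue.value" to be 17, got 16
}
```

Reporting the full path of the mismatched field makes a failing test much quicker to diagnose than a bare "objects differ".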

You can see the full set of simulated tests here: https://github.com/hone-labs/teal-testing-example/blob/main/test/simulated/teal-counter.test.js

How does the simulation work?

You might be wondering what makes this simulation work. In particular, what sits under the function executeTeal that actually evaluates the TEAL code?

Well, the simulator is something we built ourselves at Hone and have since open-sourced to share with the community. Our teal-interpreter is published on npm and you can find the code on GitHub.

The code for executeTeal loads the TEAL file and evaluates it using the teal-interpreter:

```javascript
const { execute } = require("teal-interpreter");
const { readFile } = require("../../../scripts/lib/algo-utils");

// Loads a TEAL file and evaluates it on the simulated AVM, returning the
// resulting AVM state so that the test can inspect it.
async function executeTeal(tealFileName, config) {
    const code = await readFile(tealFileName);
    return await execute(code, config);
}
```

Hone’s teal-interpreter parses the TEAL code and executes it using the passed-in configuration. When finished, it returns the new state of the simulated AVM so that we can check the result in our automated tests.

Learn more about the teal-interpreter’s configuration format.

The biggest advantage of simulated tests

The biggest advantage of simulated tests for us at Hone is that we can produce a code coverage statistic. This tells us what percentage of the code in our smart contract is covered by our automated tests. The closer we get this metric to 100%, the more confident we can be that we have thoroughly tested our code.

As far as I know, you can’t get this information from the real AVM, so when running our actual tests against a sandbox we have no idea how much of our code is being executed.

Unfortunately, the code to compute overall coverage is separate from the teal-interpreter itself (and we haven’t open-sourced it yet). In the future I’d like to build this feature directly into the published teal-interpreter, but for now, if you are interested, you can see part of the code below.

After invoking the teal-interpreter on our code for a particular test configuration we then extract the coverage results for this particular test run and save them to a JSON file:

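```typescript
import * as path from "path";
import * as fs from "fs-extra";
// Assumed imports: TealInterpreter, ITealInterpreterConfig and IExecutionContext
// from teal-interpreter; writeCodeCoverage from Hone's unpublished coverage code.

export async function executeTeal(
    fileName: string,
    tealCode: string,
    config: ITealInterpreterConfig
): Promise<IExecutionContext> {
    const interpreter = new TealInterpreter();
    interpreter.load(tealCode);
    interpreter.configure(config);

    try {
        await interpreter.run();
        return interpreter.context;
    }
    finally {
        // Runs whether or not execution succeeded, so every test records coverage.
        // Derive a per-test output directory from the current test's name.
        const testName = expect.getState().currentTestName;
        const testOutputPath = path.join(
            "./test-output",
            testName.replace(/ > /g, "/").replace(/ /g, "-"),
        );
        await fs.ensureDir(testOutputPath);

        // writeCodeCoverage streams its output to code-coverage.teal and
        // returns the per-line coverage results for this run.
        const codeCoverageOutputPath = path.join(testOutputPath, "code-coverage.teal");
        const coverageResults = writeCodeCoverage(
            fileName,
            interpreter,
            fs.createWriteStream(codeCoverageOutputPath),
        );

        // Save the coverage results for this test run as JSON.
        const coverageResultsPath = path.join(testOutputPath, "coverage-results.json");
        await fs.writeFile(
            coverageResultsPath,
            JSON.stringify(coverageResults, null, 4),
            "utf8"
        );
    }
}
```

The coverage-results.json file saved by the code above maps line numbers to execution counts. It shows how many times each line of code in the TEAL file was executed.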

Of course, by itself the code coverage for a single test run isn’t enough. We’d like to know the accumulated code coverage for the entire smart contract through the entire test suite (numerous test runs). So additional code is required to stitch together each of the individual JSON files into an accumulated data structure we can use to calculate exactly what proportion of lines have been executed.
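That stitching code isn’t published either, so here is a minimal sketch of the idea. It assumes each per-test result is an object mapping a TEAL line number to an execution count, and that every executable line appears in every result (with a count of 0 when it didn’t run); the function names `mergeCoverage` and `coveragePercent` are hypothetical.

```javascript
// Sketch: accumulate per-test coverage into totals for the whole suite.
// ASSUMPTION: each per-test result maps a TEAL line number to the number of
// times that line executed, and lists every executable line (0 if it never ran).
function mergeCoverage(perTestResults) {
    const totals = {};
    for (const result of perTestResults) {
        for (const [line, count] of Object.entries(result)) {
            totals[line] = (totals[line] || 0) + count;
        }
    }
    return totals;
}

// Percentage of lines that executed at least once across all test runs.
function coveragePercent(totals) {
    const counts = Object.values(totals);
    if (counts.length === 0) {
        return 0;
    }
    const executed = counts.filter((count) => count > 0).length;
    return (executed / counts.length) * 100;
}

// Usage: two test runs that exercise different parts of the contract.
const runA = { "1": 1, "2": 1, "3": 0, "4": 0 };
const runB = { "1": 1, "2": 0, "3": 2, "4": 0 };
const suiteTotals = mergeCoverage([runA, runB]);
console.log(coveragePercent(suiteTotals)); // 75
```

In a real pipeline the inputs would be read from the per-test JSON files saved under ./test-output. Line 4 never runs in either test, which is exactly the kind of gap the accumulated statistic is meant to reveal.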

If code-coverage sounds useful to you, please let me know and I can work towards adding this feature directly into the teal-interpreter to make it easy for everyone to use.

Debugging your smart contract

It’s worth noting that we didn’t actually build the teal-interpreter to support automated testing, even though that’s ultimately where we get the most use out of it.

We actually built the teal-interpreter to support our TEAL debugger extension for VS Code. With this extension installed you can simply hit F5 in VS Code to start debugging your TEAL code.

You don’t need a sandbox and you don’t need to generate a dry run. This is the fastest, easiest and most convenient way to debug TEAL code that currently exists. To make this debugger possible we run our TEAL code on a simulated AVM, our teal-interpreter.

You can’t get away with only simulated tests

At this point it’s probably worth stating, for the record, that simulated testing isn’t enough.

Simulated testing isn’t a replacement for actual testing on a real Algorand Sandbox. Actual testing is necessary, whereas simulated testing is completely optional; that’s why I covered actual testing in part two before covering simulated testing here in part three.

So why do we bother with simulated testing at all? Simulated testing is simply a layer of sophistication above normal sandbox testing and you might not feel the need for it at all. But if you are like me, living and breathing rapid development, you want fast feedback. No, you want near instant feedback!

The faster you can check that your code change has worked (and you haven’t broken anything else in your suite of tests) the faster you can move forward with the next code change. I will go further and say that if you want to do test driven development (TDD) you really do need this level of performance from your automated tests.

Now even if you are using simulated testing, you still can’t exclude actual testing. You still need to be sure that your code is going to work reliably and deterministically on an actual Algorand Sandbox. So you might run simulated tests moment to moment throughout your day and then run actual tests only once every few hours just to be sure that everything will still work on the real blockchain.

Defensive testing

It’s not enough to test the happy path through our code. We can’t just test the path that we hope our users will take. Testing that our smart contract works as it was intended is only a starting point. We must also exercise the edge cases at the extremities of our code.

To be really confident that our code can’t be abused, either accidentally or maliciously, we need to actively attack our own code to try and find ways of breaking it. If you don’t do this someone else will and they probably won’t be friendly. I call this defensive testing because I see it as a way of provoking defensive programming, preemptively designing our code to be resilient in the face of errors and attacks.

We need to make sure that our code, when it is used in the wrong way, responds gracefully and that there are no bugs or loopholes that others might exploit for their own gain (often at our expense).

Once we have tried every way we can think of to break our code (and fixed any problems that come up), we can be as sure as possible that our code will survive the attacks that will surely come once we deploy to Mainnet.

As an example of defensive testing let’s consider the following test:

```javascript
test("only the creator can increment", async () => {
    await creatorDeploysCounter(0);

    // Create a temporary testing account.
    const userAccount = await createFundedAccount();

    // Some other user attempts to increment the counter...
    await expect(() => userIncrementsCounter(userAccount))
        .rejects
        .toThrow(); // ... and it throws an error.
});
```

The name of the test clearly states its purpose. We are not checking something that is allowed to happen; instead we are checking something that is explicitly disallowed.

In the case of the teal-counter, let's say that we have decided that only the creator of the smart contract should be able to invoke these privileged increment and decrement methods.

The test above proves that some other “user” cannot invoke these methods.

The important part is at the end of the test:

```javascript
await expect(() => userIncrementsCounter(userAccount))
    .rejects
    .toThrow();
```

We are checking that when userAccount (as opposed to creatorAccount) attempts to invoke the increment method, the attempt is rejected.

If there are things that a user shouldn’t be able to do with our code, then we must write tests to confirm that they cannot do those things.

Be sure your tests can fail

It’s all well and good to write tests that show there are no bugs in our code. But what happens when it’s the test code that has the bugs?

Code for automated testing is just code and so it’s not immune from containing its own issues. How can we trust that our code works if we can’t even trust our test code?

The way to know that our tests are working is to know that they can fail. If you have a test that is incapable of failing, that test is not capable of detecting bugs in your code.

Ensuring that our tests work from the start is the domain of test driven development (TDD) which we’ll discuss in a moment. But otherwise how can we prove that tests we already wrote are ok?

To prove that a particular test is capable of failing, we can modify the code under test in a way that should make the test fail. Obviously this isn’t a code change we want to commit: we are just running an experiment to check that our tests are able to fail, and afterwards we revert the change.

As an example let’s consider the previous test we looked at called “only the creator can increment”. What is it that makes this test pass? It’s the following TEAL code from our smart contract that rejects (using assert) when the sender of the transaction is not the same as the creator:

```
txn Sender
global CreatorAddress
==
assert
```

This is the code that makes this test pass, so removing this code should make the test fail.

Feel free to try this out for yourself. First, make sure you start with passing tests. Then go into the TEAL file and delete those lines of code. Now run the tests again, you should find that the test in question fails, it fails because we removed the code that makes it pass. Removing that code will most likely cause other tests to fail as well.

Running this experiment has served its purpose: we are now sure that our test can fail so now we can revert the code change and go back to passing tests.
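This experiment is a manual version of a technique known as mutation testing. If you want to repeat it for several parts of the contract, a small helper can produce the modified copy of the TEAL code for you. A minimal sketch with a hypothetical `removeTealBlock` function; it only edits the TEAL text, so you would still need to save the result to a file that your simulated tests load (executeTeal takes a file name) and check that at least one test fails.

```javascript
// Hypothetical helper: returns a copy of the TEAL source with one block of
// consecutive lines removed, so the test suite can be run against the mutant.
function removeTealBlock(tealCode, blockLines) {
    const lines = tealCode.split("\n");
    const trimmed = lines.map((line) => line.trim());

    for (let start = 0; start + blockLines.length <= lines.length; start++) {
        const matches = blockLines.every((blockLine, i) => trimmed[start + i] === blockLine);
        if (matches) {
            return [...lines.slice(0, start), ...lines.slice(start + blockLines.length)].join("\n");
        }
    }

    // If the block isn't there, the "mutant" would be identical to the original.
    throw new Error("Block not found: the mutation would not change the code.");
}

// The creator check that makes "only the creator can increment" pass.
const creatorCheck = ["txn Sender", "global CreatorAddress", "==", "assert"];

// A made-up TEAL program for illustration.
const original = [
    "#pragma version 5",
    "txn Sender",
    "global CreatorAddress",
    "==",
    "assert",
    "int 1",
    "return",
].join("\n");

console.log(removeTealBlock(original, creatorCheck));
// #pragma version 5
// int 1
// return
```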

What happens if the test doesn’t fail? If we remove or modify the code that should cause the test to fail, but the test still passes, then we have a big problem.

It means that our code can change (in a bad way) and it won’t trigger our early warning system: our automated tests. If you discover tests that can’t fail, something is wrong with your testing pipeline. You might have bugs in your test code that need to be figured out, maybe something is misconfigured or maybe you are even testing the wrong code.

Whatever the case you need to get to the bottom of it. Maintaining automated tests is expensive enough already, but if you are maintaining broken automated tests - that’s just a waste of time.

Debugging your tests

Making sure our tests work is just as important as making sure our regular code works. Here are some debugging tips to help you find problems and understand what’s happening in both your TEAL code and your test code.

Before you even get to writing test code you should have some kind of testbed with which you can deploy your smart contract and invoke methods against it. That’s actually what we did way back in part one!

Do you remember invoking scripts like npm run deploy and npm run increment-counter? Using a testbed (really just a code file that exercises the code you want to play with) is a better way to debug than working in automated tests. You can add whatever logging you want, and console.log is your best friend for printing out results, inspecting intermediate data and showing the state of the blockchain. You can pass pretty much any string or data structure into it and it will dump it to your terminal.

I take this much further and rely on higher-level helper functions like dumpData, dumpGlobalState and dumpTransaction, all of which display formatted data for easy inspection in the terminal. These functions let me print, in a readable format, the global state of the contract, the details of a recent transaction, or really just any old object if I’m using the generic dumpData function.
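The dump helpers aren’t shown in this series, so here is only a sketch of what a generic dumpData-style function might look like; the helper names and signatures below are assumptions. The one detail worth copying is the replacer: JSON.stringify throws a TypeError when it meets a BigInt, and BigInts turn up often in Algorand data.

```javascript
// Converts BigInt values to strings, because JSON.stringify throws a
// TypeError when it encounters a BigInt.
function bigIntReplacer(key, value) {
    return typeof value === "bigint" ? value.toString() : value;
}

// Formats any value as indented JSON for easy reading in the terminal.
function formatData(data) {
    return JSON.stringify(data, bigIntReplacer, 2);
}

// Sketch of a generic dump helper: prints any object in a readable form.
function dumpData(data) {
    console.log(formatData(data));
}

dumpData({ appId: 1, counterValue: 16n });
// {
//   "appId": 1,
//   "counterValue": "16"
// }
```

Keeping the formatting in a separate function that returns a string makes the helper itself easy to test.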

Need to get output from your TEAL code to check that it’s ok? Write it to the global state then use dumpGlobalState from JavaScript to show you that state.
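To show that state from JavaScript, a dumpGlobalState-style helper first has to decode what algod returns. In the raw JSON from algod’s `GET /v2/applications/{application-id}` endpoint, `params["global-state"]` is a list of entries whose keys are base64-encoded and whose values carry a `type` of 1 for bytes or 2 for a uint. A minimal sketch of that decoding step; the function name is hypothetical, and byte values are decoded as UTF-8 text, which won’t be meaningful for binary values such as addresses.

```javascript
// Sketch: decode the raw "global-state" array from algod's REST API into a
// plain object. Keys and byte values arrive base64-encoded.
function decodeGlobalState(globalState) {
    const decoded = {};
    for (const { key, value } of globalState) {
        const name = Buffer.from(key, "base64").toString("utf8");
        if (value.type === 2) {
            decoded[name] = value.uint; // type 2: uint64 value.
        } else {
            // type 1: byte slice, decoded here as UTF-8 text.
            decoded[name] = Buffer.from(value.bytes, "base64").toString("utf8");
        }
    }
    return decoded;
}

// Made-up sample in the shape algod returns.
const sampleGlobalState = [
    { key: Buffer.from("counterValue").toString("base64"), value: { type: 2, uint: 16, bytes: "" } },
    { key: Buffer.from("owner").toString("base64"), value: { type: 1, uint: 0, bytes: Buffer.from("alice").toString("base64") } },
];

console.log(decodeGlobalState(sampleGlobalState)); // { counterValue: 16, owner: 'alice' }
```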

When it comes to testing, your tests shouldn’t do spurious logging, that just gets in the way of reading the output of your testing pipeline. However it’s totally ok to have debugging logging in your tests during development, just make sure you remove that logging before you commit your code (otherwise you’ll annoy your teammates).

In fact, using logging in tests is one of the primary ways that I create expectations for my tests. For instance, I’ll start by creating a test that has no expectations:

```javascript
test("creator can increment teal counter", async () => {
    const initialValue = 15;
    const { appId, creatorAccount } =
        await creatorDeploysCounter(initialValue);

    const { txnId, confirmedRound } = await creatorIncrementsCounter();

    // … expectations to go here
});
```

Then I’ll add calls to dumpGlobalState to see the state before and after:

```javascript
test("creator can increment teal counter", async () => {
    const initialValue = 15;
    const { appId, creatorAccount } =
        await creatorDeploysCounter(initialValue);

    await dumpGlobalState();

    const { txnId, confirmedRound } = await creatorIncrementsCounter();

    await dumpGlobalState();
});
```

I can now inspect the console to see what the state looked like before and after the increment operation. I’m letting my code tell me what happened.

I then replace it with a call to expectGlobalState which bakes the expectation into the test:

```javascript
test("creator can increment teal counter", async () => {
    const initialValue = 15;
    const { appId, creatorAccount } =
        await creatorDeploysCounter(initialValue);

    const { txnId, confirmedRound } = await creatorIncrementsCounter();

    await expectGlobalState({
        // ... expected values go here ...
    });
});
```

To learn the details of the transaction added to the blockchain, I use the same process. This time, though, I use dumpTransaction to tell me what the transaction looks like. I then replace dumpTransaction with expectTransaction, again to bake the expectation into the test.

Abracadabra, no fuss at all, I have a working test. I didn’t even break a sweat, because I let the code tell me how to write the test.

One last debugging tip: use Jest’s -t argument (short for --testNamePattern, which matches test names against a regular expression) to isolate a particular test or set of tests. For example, I could focus on just the test above like this:

```bash
npm run test-actual -- -t "creator can increment teal counter"
```

Everything after the -- is passed through to Jest. In this case we are instructing Jest to run only that single test.

This is great when you need to focus on one test at a time, including those times when you change your code and break all your tests! The best way to deal with that situation is to work through your tests one by one so as not to be overwhelmed by the wall of failing tests.

Test driven development

So where does test driven development (TDD) fit into this picture?

TDD, as you probably know, is the practice of writing failing tests before you write the code that makes them pass, also known as test-first.

Doing TDD with TEAL code is absolutely possible and I have tried it myself. Test-first is a good practice with many benefits:

- It helps you to bullet-proof your code

- It leads to very good test coverage

- It forces you to think about outcomes before writing code

- It ensures that your tests work (i.e. that they are capable of failing)

For all its benefits, it has to be said that test-first can be a much slower process than test-later.

If you are doing test-later, you can evolve your code significantly before you create your automated tests. This saves a lot of time, because you don’t have to keep your tests working during the early prototyping of your smart contract. Once you are happy that your contract code has stabilized and is no longer changing as frequently, that’s a good time to write your automated tests.

Test-later allows you to cheat with your testing. You can let your code show you what the results are and then add the correct expectations to the test. This is exactly what we did in the previous section!

This process of exercising your code and visualizing the results will help you spot bugs. You can then do a form of TDD if you like… writing a failing test and then fixing the bug to make the test pass.

Test-first or test-later, it doesn’t really matter how you get to automated testing, so long as the end result is good test coverage in the places where you need it.

Conclusion

This has been the last part in a three part series of blog posts on automated tests for Algorand smart contracts.

In this post we learned how to run automated tests against a simulated Algorand virtual machine, which is faster and more convenient than running against the Algorand Sandbox like we did back in part two.

We also talked about debugging our code and tests, using defensive testing to make sure our code can gracefully handle bad situations and finally we touched on test driven development.

About the author

Ashley Davis is a software craftsman and author. He is VP of Engineering at Hone and currently writing Rapid Fullstack Development and the 2nd Edition of Bootstrapping Microservices.

Follow Ashley on Twitter for updates.

About Hone

Hone solves the capital inefficiencies present in the current Algorand Governance model, where participating ALGO must remain in a user’s account to be eligible for rewards, eliminating the possibility for it to be used as collateral in Algorand DeFi platforms or as a means to transfer value on the Algorand network. With Hone, users can capture governance-reward yield while still using their principal, and the yield it generates, by depositing ALGO into Hone and receiving a tokenized derivative called dALGO. They can then take dALGO and use it as collateral in DeFi or simply transfer value on-chain without sacrificing governance rewards.